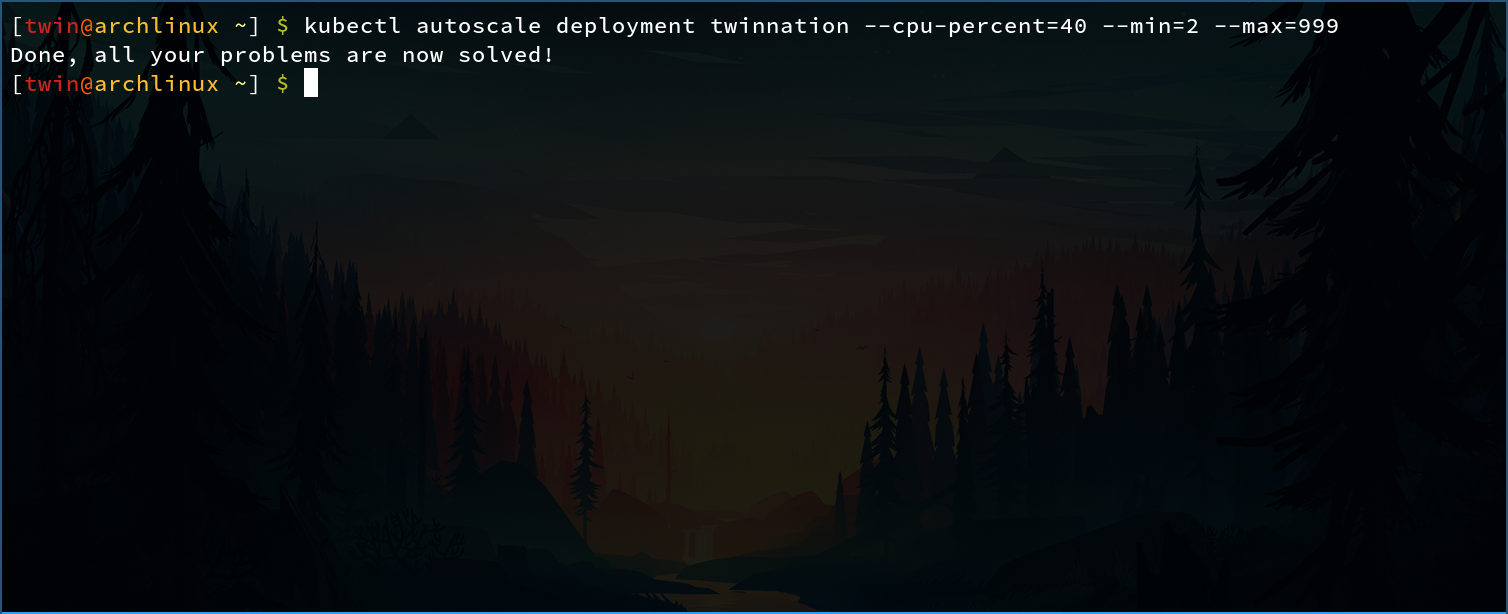

Shit Programmers Say: “Just Scale Up”

With cloud providers making scalability easier and easier, “just scaling up” has become the new easy way out, even though it isn’t always the best option.

I love scalability, and I have studied it at length in the context of Kubernetes, but the simplicity its automation offers makes it easy to hide bad design decisions, whether intentionally or not.

This is especially easy to overlook given how much effort cloud providers have put into letting users add a shard or a replica with the click of a button, or even configure auto scaling and never have to worry about it again.

Granted, some do have the luxury of saying “it doesn’t matter if we quadruple our costs”, but as a software engineer, I believe it’s important to keep performance in mind when you write code, even if you’re told that you can just scale it up and forget about it.

Let’s say you’re presented with the following scenario:

You know that your read traffic is going to increase tenfold in the very near future, but your existing infrastructure is barely sufficient to handle today’s load.

Your infrastructure is made up of a stateless application that retrieves data from a database. While the stateless application will have no problem scaling up and down to meet demand, your database doesn’t have an auto scaling feature.

The simplest conclusion to draw is that if a single database replica allows the aforementioned stateless application to handle N requests per second, then 10 replicas would allow you to handle 10N requests per second, right? Yeah, probably, but there are alternatives.
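
To make that arithmetic concrete, here’s a small Python sketch that assumes read capacity scales perfectly linearly with the number of database replicas (real databases rarely behave that nicely). The `replicas_needed` function, its `cache_hit_pct` parameter, and the figure of 1,000 requests per second per replica are all hypothetical; `cache_hit_pct` stands for the percentage of reads that something like a cache absorbs before they ever reach the database.

```python
def replicas_needed(target_rps: int, per_replica_rps: int, cache_hit_pct: int = 0) -> int:
    """Estimate how many database replicas are needed, assuming perfectly linear scaling.

    cache_hit_pct (0-100) is the hypothetical percentage of reads served from a cache;
    only the remaining reads reach the database.
    """
    if not 0 <= cache_hit_pct <= 100:
        raise ValueError("cache_hit_pct must be between 0 and 100")
    # Work in integers (scaled by 100) to avoid floating-point rounding surprises.
    db_load = target_rps * (100 - cache_hit_pct)
    capacity = per_replica_rps * 100
    # Ceiling division: a partially used replica still has to be provisioned,
    # and we always keep at least one database instance.
    return max(1, -(-db_load // capacity))


if __name__ == "__main__":
    N = 1_000  # requests per second a single replica can serve (made-up figure)
    print(replicas_needed(10 * N, N))                    # 10
    print(replicas_needed(10 * N, N, cache_hit_pct=90))  # 1
```

Under these assumptions, a tenfold increase needs 10 replicas when every read hits the database, but only one if 90% of reads are served from somewhere else, which is where the alternatives come in.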

There are many things that can be done to improve performance. The most common strategies are:

- Caching
- Rate limiting
- Bulk requests
- Benchmarking to remove unnecessary memory allocations
- Trying libraries other than the ones you’re currently using, in the hope that one of them is more performant

(The last one is more of an act of desperation than an actual strategy.)

Of the strategies listed above, caching and bulk requests could both be leveraged; however, bulk requests are not suitable for all use cases. For example, if a single request made to the stateless application results in a single transaction on the database, then batching queries isn’t really an option, unless you batch queries from multiple separate client requests, which might increase latency. Conversely, if a single client request to the stateless application results in dozens of transactions on the database, batching them together might give you a significant performance improvement. For the sake of simplicity, we’ll focus on caching for our scenario.
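
As an illustration of the “dozens of transactions per client request” case, here’s a minimal Python sketch that uses an in-memory SQLite database as a stand-in for the real one. The table, the `CountingDB` wrapper, and both fetch functions are hypothetical; the only point is the number of round trips: one query per product versus a single `IN (...)` query for all of them.

```python
import sqlite3


class CountingDB:
    """Hypothetical wrapper around a sqlite3 connection that counts queries (round trips)."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.queries = 0

    def fetchall(self, sql: str, params: tuple = ()) -> list:
        self.queries += 1
        return self.conn.execute(sql, params).fetchall()


def setup() -> CountingDB:
    # In-memory SQLite stands in for the real database.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, stock INTEGER)")
    conn.executemany(
        "INSERT INTO products VALUES (?, ?, ?)",
        [(i, f"product-{i}", i % 3) for i in range(1, 101)],
    )
    return CountingDB(conn)


def fetch_one_by_one(db: CountingDB, ids: list[int]) -> list:
    # One query per product: N round trips for N ids.
    rows = []
    for pid in ids:
        rows.extend(db.fetchall("SELECT id, name, stock FROM products WHERE id = ?", (pid,)))
    return rows


def fetch_batched(db: CountingDB, ids: list[int]) -> list:
    # One query for all products, with one "?" placeholder per id.
    if not ids:
        return []
    placeholders = ",".join("?" * len(ids))
    sql = f"SELECT id, name, stock FROM products WHERE id IN ({placeholders}) ORDER BY id"
    return db.fetchall(sql, tuple(ids))


if __name__ == "__main__":
    db = setup()
    ids = list(range(1, 25))
    one_by_one = fetch_one_by_one(db, ids)
    print(db.queries)  # 24
    db.queries = 0
    batched = fetch_batched(db, ids)
    print(db.queries)  # 1
    print(one_by_one == batched)  # True
```

Against an in-process SQLite database the difference is small, but against a networked database every avoided round trip also saves network latency. Very large ID lists may need to be split into chunks; SQLite, for instance, caps the number of bound parameters per statement.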

Caching is by far the best way to improve performance for our problem. Not only does it spare us the cost of the additional read-only database replicas, but assuming the stateless application does some processing on the data once it has been retrieved from the database (e.g. sorting or filtering), caching the already processed data also reduces the resources (CPU, memory) the stateless application uses to process each client request.
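
Here’s a minimal cache-aside sketch in Python that caches the processed result (filtered and sorted) rather than the raw rows, so a cache hit skips both the database query and the processing. It uses an in-process dictionary with a time-to-live to stay self-contained; in the scenario above, the cache would be a distributed one shared by all replicas of the stateless application (Redis is a common choice). `TTLCache`, `FakeProductDB`, and the 30-second TTL are assumptions made purely for illustration.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Minimal in-process cache-aside store with per-entry expiry (illustrative only)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: dict[str, tuple[float, Any]] = {}  # key -> (expires_at, value)

    def get_or_compute(self, key: str, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # hit: no database query and no re-processing
        value = compute()    # miss or expired: go to the database and process
        self._entries[key] = (now + self.ttl, value)
        return value


class FakeProductDB:
    """Stand-in for the real database; counts how often it is queried."""

    def __init__(self):
        self.calls = 0
        self.rows = [
            {"sku": "a", "price": 30, "in_stock": True},
            {"sku": "b", "price": 10, "in_stock": False},
            {"sku": "c", "price": 20, "in_stock": True},
        ]

    def all_products(self) -> list[dict]:
        self.calls += 1
        return [dict(row) for row in self.rows]


def in_stock_by_price(db: FakeProductDB) -> list[dict]:
    # The processing step worth caching: filter out sold-out items, then sort by price.
    return sorted((r for r in db.all_products() if r["in_stock"]), key=lambda r: r["price"])


def handle_request(cache: TTLCache, db: FakeProductDB) -> list[dict]:
    # Cache-aside: return the processed result if cached, otherwise compute and store it.
    return cache.get_or_compute("in_stock_by_price", lambda: in_stock_by_price(db))


if __name__ == "__main__":
    db = FakeProductDB()
    cache = TTLCache(ttl_seconds=30)
    for _ in range(1_000):
        result = handle_request(cache, db)
    print(db.calls)                    # 1
    print([r["sku"] for r in result])  # ['c', 'a']
```

Within the TTL, a thousand requests cost a single database query and a single sort. The trade-off is that every request during those 30 seconds sees the same snapshot, which is exactly where the trouble starts.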

The downside of caching is, well, it’s not always simple to implement.

If a product is sold out and you return a cached entry saying it isn’t, you might end up with unhappy customers when the payment fails because the payment API queries the database directly to check availability. Moreover, if you end up spending the cost of the 10 database replicas on customer support instead, picking the simplest option may have been the right approach, at least business-wise. Designing the relationship between your application, a database and a cache isn’t always easy, especially if you have to deal with both reads and writes.
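
The sold-out scenario is easy to reproduce. In this hypothetical Python sketch, one dictionary stands in for the database and another for the cache; `ProductStore` and `invalidate_on_write` are made-up names. Without invalidation, the cache keeps reporting the last unit as available after it has been sold, while deleting the cache entry on every write fixes that, at least within a single process.

```python
class ProductStore:
    """Hypothetical product service: a dict acting as the database, with a cache in front."""

    def __init__(self, invalidate_on_write: bool):
        self.db = {"sku-1": 1}   # sku -> units in stock (the source of truth)
        self.cache = {}          # sku -> cached "available" flag
        self.invalidate_on_write = invalidate_on_write

    def is_available(self, sku: str) -> bool:
        # Read path used by the product page: served from the cache when possible.
        if sku not in self.cache:
            self.cache[sku] = self.db[sku] > 0
        return self.cache[sku]

    def sell(self, sku: str) -> None:
        # Payment path: checks the database directly, like the payment API in the text.
        if self.db[sku] <= 0:
            raise RuntimeError(f"{sku} is sold out")
        self.db[sku] -= 1
        if self.invalidate_on_write:
            # Drop the entry so the next read goes back to the database.
            self.cache.pop(sku, None)


if __name__ == "__main__":
    for invalidate in (False, True):
        store = ProductStore(invalidate_on_write=invalidate)
        store.is_available("sku-1")  # caches True
        store.sell("sku-1")          # the last unit is sold
        print(invalidate, store.is_available("sku-1"))
    # False True   (stale: the page still shows the product as available)
    # True False
```

With a distributed cache and many application replicas, this gets harder: a read on another replica can repopulate the cache with the old value between the database write and the invalidation, so simply deleting the entry is not a complete answer.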

On one hand, having a database instance with 10 read-only replicas will be expensive long-term.

On the other hand, having a single database instance with a distributed cache shared by the replicas of our stateless application will be cheaper long-term, but more expensive short-term, since it has to be implemented.

It may seem like I’m hinting that taking the easy way out (just scaling up) has its benefits, but the real issue often lies in where you draw the line between “let’s just scale up one more time” and “I don’t think we can keep adding replicas like this”.

If scaling up to deal with problems becomes the norm, you might end up with something like 100 database replicas, and it’ll seem a lot more acceptable to bump that number up at the first sign of increased latency, for reasons like “we’ve done it before” and “it’ll only increase the cost by 1%”, when really, you should be asking yourself “why are we still doing this?” and “why are we paying for the other 99%?”.